Written by Liberty Munson, Director of Psychometrics, Microsoft

I’m the Director of Psychometrics at Microsoft for our technical credentialing program. The ITCC asked me to write a blog post on psychometrics because I’m a bit strange: I love all things measurement, including exams, assessments, tests… whatever you want to call them. I basically can’t stop talking about it! Well-designed evaluations really are a thing of beauty because they can help you identify your strengths and weaknesses with a surprisingly small number of well-crafted questions. Granted, this is rare to see in the wild, but knowing it’s possible is fascinating to me. For the most part, the exams you took in school were not well crafted or well designed and likely were not psychometrically sound, but don’t think about that too much.

Because psychometrics makes meaningful evaluation possible, it’s worth understanding the basics. Psychometrics (when applied appropriately) results in valid, reliable, fair, and meaningful outcomes. I am going to take you on a journey through those basics. But even with a focus on the basics, there is much to cover in order to paint a picture of the wonder that is psychometrics, hence the two-part series. I hope you’ll join me on this journey!

Let’s start with a definition. At its core, psychometrics is about measuring the right skills in the right way so that we can make an inference about the level of those skills based on the outcome of some type of evaluation (exam, assessment, actions performed, etc.). Because this evaluation goes by many different names (exam, assessment, measurement, test, etc.), I’m going to keep things simple and refer to them collectively as “evaluations” or the “evaluation process.” The meaning of each of these terms varies not only within an industry (e.g., in credentialing, an exam is generally defined as a rigorous evaluation process not explicitly tied to training, whereas an assessment is the evaluation that follows a training activity with the express purpose of assessing the acquisition of the learning objectives) but across industries as well (e.g., in selection testing, the evaluation is more often referred to as an assessment or a test, although it is rarely, if ever, tied to a training event). I am also going to use the word “question” to refer collectively to the part of the evaluation that is scored, although in the testing industry we more commonly call these “items” to reflect the fact that they are not limited to questions. Items include questions of all types (closed ended, open ended, multiple choice, essay, etc.), activities or tasks performed, and so on.

Before we can say that someone has achieved a certain level of competence in a specific content domain based on the result of an evaluation, that evaluation must be designed, developed, delivered, and maintained according to best practices defined by measurement theory. Measurement theory tells us how to structure questions, how to evaluate skills, and which statistical analyses to use to evaluate the quality of the evaluation process. These practices have been identified and refined through decades of scientific research. Measurement theory is the heart of psychometrics.

Many people assume that psychometrics is about data analysis and statistics, but it’s so much more than that.

Psychometrics includes the processes and decisions that surround the design, development, delivery, and maintenance of the evaluation process. Every decision made about an evaluation process is a psychometric decision because each decision influences the inferences that you can make about someone’s competence based on the result of that evaluation. How you select subject matter experts (SMEs), how many SMEs you use, how you train the SMEs, how you identify item writers, the item types you decide to use, the number of beta participants you require before conducting your statistical analysis, the approach that you use to establish the passing score, how often you revisit the job task analysis or review the content domain…all of these are psychometric decisions that affect the quality of the measurement process and, as a result, the quality of the inferences you can draw from the results of the evaluation process.

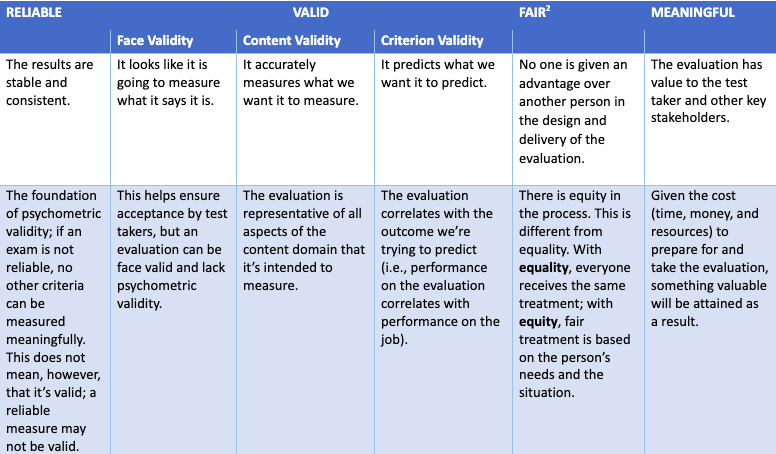

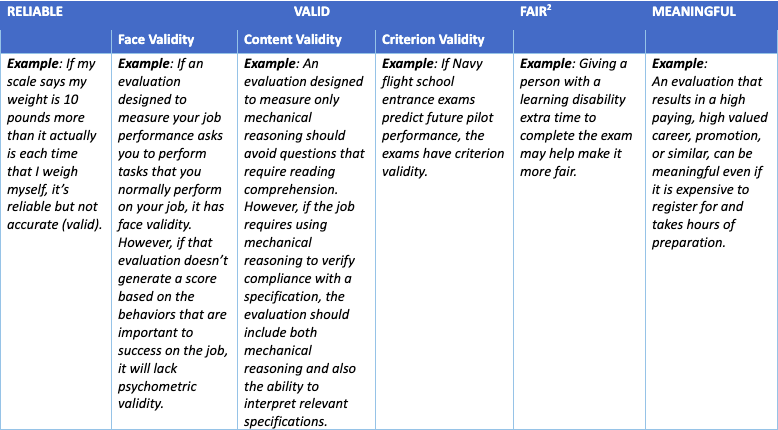

The core tenet of psychometrics is to ensure that an evaluation is valid, reliable, fair, and meaningful.

- Valid: Does the evaluation accurately measure what it is intended to measure?

- Reliable: Is the result repeatable? All things being equal, if the same person takes the evaluation multiple times, do they obtain similar results?

- Fair: Is everyone who takes the evaluation held to the same criterion? Does everyone have the same opportunity (equity rather than equality) to demonstrate their level of competence?

- Meaningful: Is the outcome meaningful both for the person who completed the evaluation and for key stakeholders?
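To make the reliability question a little more concrete: one common way to estimate it is test-retest reliability, the correlation between the scores the same people earn on two administrations of the same evaluation. The sketch below assumes a Pearson correlation and uses made-up scores; the function name `pearson_r` and the data are hypothetical and for illustration only. It is one of several ways to estimate reliability (internal-consistency estimates are another), not a prescribed method.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances, left unscaled because the 1/n factors cancel in r
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary to compute a correlation")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for the same five people on two sittings of one evaluation
first_sitting = [62, 75, 81, 58, 90]
second_sitting = [65, 73, 84, 55, 88]

r = pearson_r(first_sitting, second_sitting)
print(f"test-retest reliability estimate: r = {r:.2f}")
```

A value of r close to 1 means people kept roughly the same relative standing across the two sittings, which is what "repeatable" means here. A high r says nothing about whether the evaluation measures the right skills; that is the validity question.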

The table below illustrates how these concepts relate to each other and introduces three types of validity, including face validity, which is important to test-taker acceptance but does not guarantee psychometric validity.

(2) I’m defining fairness more broadly than it’s traditionally defined in psychometrics because it’s important to understand the difference between equity and equality. Strictly speaking, fairness is the removal of bias, or of anything else that introduces error into measurement.

(3) The content in this table is modified from the 2022 ITCC resource Certifications and Learning Assessments: What are the Differences?

This is a good start on our journey to learn the basics of psychometrics. I hope you’ll join me for Psychometrics 101 Part 2: Psychometrically Sound Evaluations, where we will explore how psychometrically sound evaluations are designed, developed, delivered, and maintained so that the inferences drawn from their outcomes are fit for purpose.